We all know that the world is going to IP for almost all communications. Despite this trend, it is a fact of life that a great deal of industrial communications still employ serial protocols such as RS232, RS422 and various flavours of RS485.

This is partly inertia, since a huge installed base of systems has been working, mostly without problems, for many years, and partly because setting up a serial data comms link is usually pretty straightforward and doesn’t require advanced network design skills. Even so, it helps to know what you are doing, and the first step is understanding the various standards used for most serial data links.

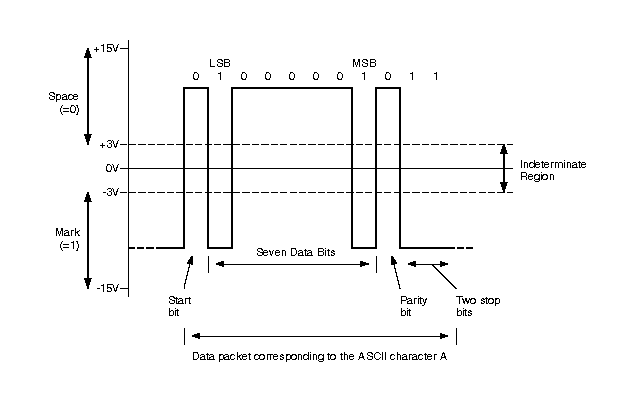

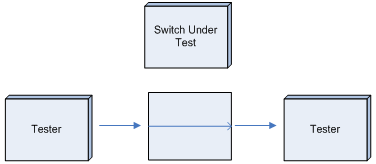

One of the most popular serial standards is RS232. This long-established standard is used for relatively low-speed serial data communications over a single point-to-point link, for example between your personal computer and its serial devices. RS232 is typically used to transmit data at up to 19.2kbps (occasionally higher) over distances of tens of meters. It is a single-ended system, meaning that it transmits the signal over one wire plus a ground return path, as shown in Figure 1 below.

Figure 1

In the idle state, i.e. when no data is being transmitted, the driver output is between -5V and -15V; it changes to between +5V and +15V when a logical “0” is being transmitted. The standard does not specify the state of the receiver’s output when its input is in the range -3V to +3V, but real RS232 line receivers generally have a threshold voltage of about +1.5V.
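To make these levels concrete, here is a minimal Python sketch that classifies a received voltage first by the standard’s ±3V limits and then as a typical receiver with a single threshold of about +1.5V would. The function names are illustrative, and modelling the receiver as one fixed threshold with no hysteresis is a simplifying assumption.

```python
# Sketch of how an RS232 receiver interprets line voltages.
# Assumptions: the standard's +/-3V limits define mark/space; the "typical"
# receiver is modelled with a single +1.5V threshold (real parts add a
# little hysteresis around a similar value).

STANDARD_LIMIT_V = 3.0      # |V| >= 3V is a defined level per the standard
TYPICAL_THRESHOLD_V = 1.5   # hypothetical single switching threshold


def standard_level(volts: float) -> str:
    """Classify a received voltage as the standard defines it."""
    if volts <= -STANDARD_LIMIT_V:
        return "mark"        # logic 1, also the idle state
    if volts >= STANDARD_LIMIT_V:
        return "space"       # logic 0
    return "undefined"       # the standard does not specify the output here


def typical_receiver_bit(volts: float) -> int:
    """Logic level a typical receiver outputs: 1 (mark) or 0 (space)."""
    return 0 if volts > TYPICAL_THRESHOLD_V else 1


if __name__ == "__main__":
    for v in (-12.0, -5.0, -1.0, 0.0, 1.0, 2.0, 5.0, 12.0):
        print(f"{v:+6.1f} V  standard: {standard_level(v):9s}  "
              f"typical receiver: {typical_receiver_bit(v)}")
```

With this model, an input sitting at 0V is “undefined” under the standard but reads as a mark, i.e. the idle state.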

RS232 has three basic limitations:

- Electrical interference can be easily induced into the signal by external noise sources

- Interference (or crosstalk) between signals in the same cable rapidly increases with increasing cable length and/or data speeds to the point where the signal is unreadable

- Achievable distances are not great: typically 20 to 50 meters for data rates up to 19.2kbps with fairly ordinary cable and perhaps twice this with low capacitance cable.

As an example of really poor practice, OSD once had a customer using a single twisted pair to carry transmit and receive data at about 9.6kbps. The crosstalk made this unworkable after just a few meters!

Enter the world of RS422 and RS485. These two standards differ from RS232 in that they use differential data transmission, which allows higher data rates over longer distances and with much greater noise immunity.

What exactly is differential data transmission?

RS232 uses only a single signal wire, and the voltage level on that one wire represents binary 1 or 0, as shown in Figure 1 above. Differential transmission, on the other hand, uses a pair of wires, and the binary information is carried by the voltage difference between them. Because the receiver responds only to that difference, electrical interference that affects both wires equally cancels out, so the differential signal stays clean provided the two wires are closely coupled. This is why such systems use twisted pairs.
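The cancellation can be illustrated with a small simulation. This is a minimal sketch, not a circuit model: it assumes the interference couples identically onto both wires of the pair (the ideal tightly twisted case) and uses arbitrary illustrative voltage levels and noise.

```python
import math

# Sketch: the same noise is added to a single-ended line and to both wires
# of a differential pair. Assumed (illustrative) levels: single-ended uses
# +1V for a 1 and -1V for a 0 relative to ground; the differential pair
# drives its two wires to +/-0.5V in opposite directions, giving the same
# 1V difference magnitude.


def receive_single_ended(bits, noise):
    """Receiver compares the signal wire against local ground."""
    out = []
    for bit, n in zip(bits, noise):
        v = (1.0 if bit else -1.0) + n
        out.append(1 if v > 0 else 0)
    return out


def receive_differential(bits, noise):
    """Receiver looks only at the difference between the two wires."""
    out = []
    for bit, n in zip(bits, noise):
        v_pos = (0.5 if bit else -0.5) + n   # noise couples equally onto...
        v_neg = (-0.5 if bit else 0.5) + n   # ...both wires of the pair
        out.append(1 if v_pos - v_neg > 0 else 0)
    return out


bits = [1, 0, 1, 1, 0, 0, 1, 0] * 4
# Hypothetical interference: a 3V-peak sine, much larger than the signal.
noise = [3.0 * math.sin(2 * math.pi * i / 8) for i in range(len(bits))]

se_errors = sum(a != b for a, b in zip(bits, receive_single_ended(bits, noise)))
diff_errors = sum(a != b for a, b in zip(bits, receive_differential(bits, noise)))
print(f"single-ended bit errors: {se_errors}, differential bit errors: {diff_errors}")
```

In a real cable the coupling onto the two wires is never perfectly equal, which is why the text stresses close coupling; the sketch shows only the ideal limit.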

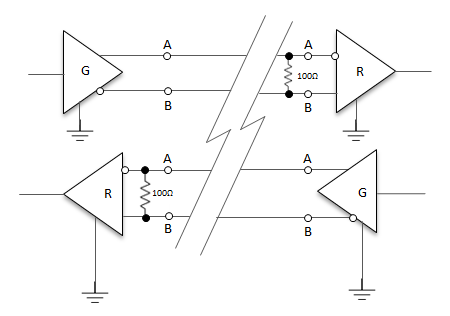

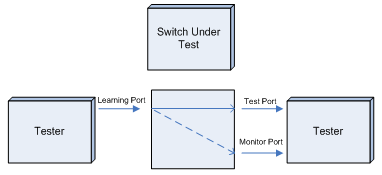

RS422 is primarily designed for point-to-point transmission, with one pair of wires for one direction and another pair for the other direction, as shown in Figure 2 above. Normally each twisted pair is terminated with a resistor equal to the characteristic impedance of the line, typically about 100 to 150Ω. It is possible to connect multiple RS422 receivers to one driver (up to 10 using EIA standard receivers, but more than 200 with many proprietary receivers), but only one termination resistor is permissible, at the far end of the line from the driver.
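Why match the termination to the characteristic impedance? A standard transmission-line result is that the fraction of a signal edge reflected back from the end of the line is Γ = (R_T − Z0)/(R_T + Z0). The sketch below evaluates it for a few cases, assuming a 120Ω cable; the function name is illustrative.

```python
import math

# Sketch: voltage reflection coefficient at the end of a transmission line,
#   gamma = (R_T - Z0) / (R_T + Z0)
# where Z0 is the cable's characteristic impedance and R_T the terminating
# resistance (math.inf for an unterminated, open-ended line).


def reflection_coefficient(r_term: float, z0: float) -> float:
    if math.isinf(r_term):
        return 1.0          # open end: the whole edge is reflected
    return (r_term - z0) / (r_term + z0)


if __name__ == "__main__":
    z0 = 120.0  # assumed cable impedance, within the 100-150 ohm range
    cases = [("matched 120 ohm", 120.0),
             ("100 ohm", 100.0),
             ("60 ohm (2 x 120 in parallel)", 60.0),
             ("unterminated", math.inf)]
    for label, r in cases:
        print(f"{label:30s} gamma = {reflection_coefficient(r, z0):+.2f}")
```

A matched resistor gives no reflection, an open end reflects everything, and a doubled-up termination reflects an inverted third of the edge while also doubling the DC load on the driver.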

Owing both to the intrinsic immunity to noise and crosstalk and to the well-controlled characteristic impedance of twisted pairs, RS422 transmission distances are far greater than those of RS232: up to about 1200 meters at low data rates, while speeds as high as 10Mbps are achievable over short runs of high quality cable.
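A commonly quoted rule of thumb for the speed/distance trade-off is that cable length in meters multiplied by data rate in bit/s should stay below about 10^8, with an upper limit of around 1200 meters at low rates. The sketch below turns that into numbers; it assumes the rule of thumb holds for your cable, and the constants and function name are illustrative rather than taken from the standard.

```python
# Sketch: rough maximum RS422 cable length for a given data rate.
# Assumption: length (m) x rate (bit/s) <= ~1e8 rule of thumb, capped at
# ~1200 m where cable losses dominate. Real limits depend on the cable.

RATE_LENGTH_PRODUCT = 1e8      # meters x bits per second (rule of thumb)
MAX_LENGTH_M = 1200.0
MAX_RATE_BPS = 10_000_000      # 10 Mbps


def max_cable_length_m(rate_bps: float) -> float:
    if not 0 < rate_bps <= MAX_RATE_BPS:
        raise ValueError("data rate must be between 0 and 10 Mbps")
    return min(MAX_LENGTH_M, RATE_LENGTH_PRODUCT / rate_bps)


if __name__ == "__main__":
    for rate in (9_600, 115_200, 1_000_000, 10_000_000):
        print(f"{rate:>10,} bit/s -> about {max_cable_length_m(rate):,.0f} m")
```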

While RS422 is defined by the EIA standard as usable in multi-drop applications, it cannot be used to construct a truly multi-point network in which multiple transmitters and receivers exist in a “bus” configuration where any node can transmit or receive data. For this we need RS485.

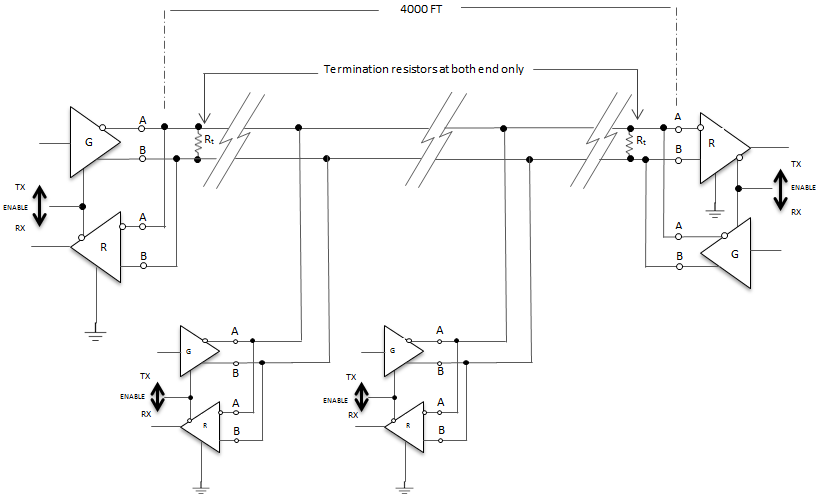

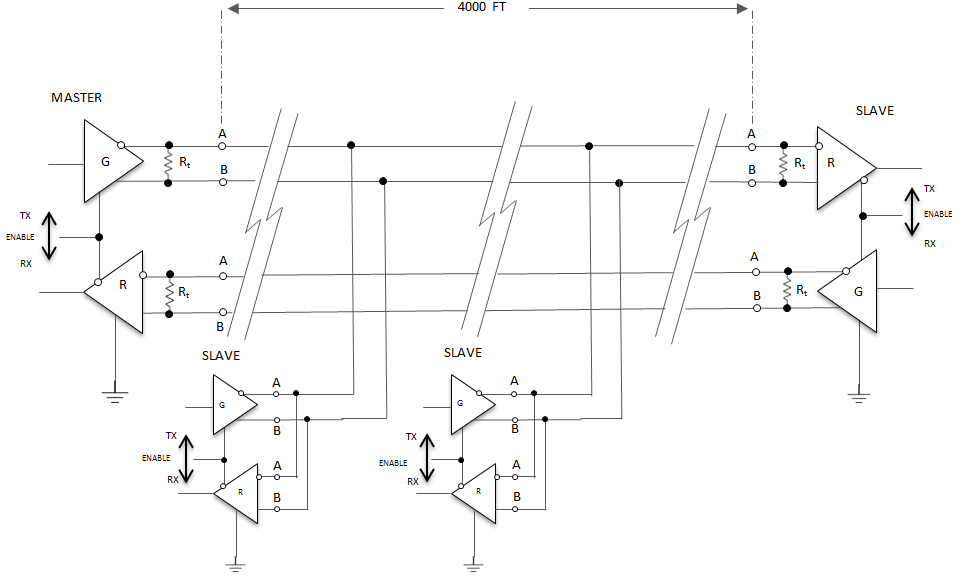

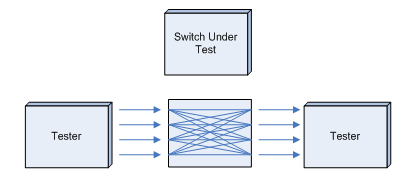

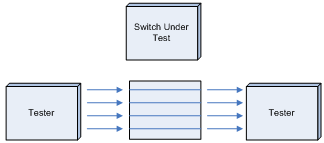

The RS485 standard allows up to 32 drivers and 32 receivers in a multi-point network configuration using just one or two twisted pairs as shown in Figure 3 below.

Figure 3 RS485 Two Wire Multidrop Network

2-wire RS485 allows either Master-Slave or Peer-to-Peer operation, with the limitation that data is transmitted in half-duplex mode: only one device in the network can transmit at any one time.

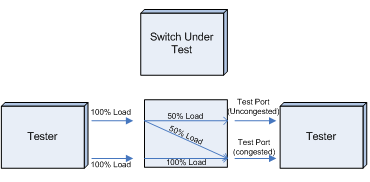

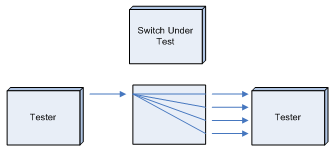

4-wire RS485 is normally used in Master-Slave networks in which one twisted pair transmits data only from the Master to all Slaves and the other twisted pair carries return data from the Slaves to the Master as shown in Figure 4 below.

2-wire RS485 networks have the advantage of lower wiring costs whilst still allowing nodes to communicate amongst themselves. On the downside, 2-wire mode is limited to half-duplex operation and requires attention to turnaround delay. 4-wire networks allow full-duplex operation but are limited to Master-Slave situations.

Figure 4 RS485 Four Wire Multidrop Network

One issue with both RS422 and RS485 is the need for a connection between the signal grounds of all devices in the network. This ground connection would not be necessary if RS422 and RS485 receivers were perfect receivers of differential signals, since they would then be unaffected by the common mode voltage on the differential signal. Unfortunately, real receivers are not perfect and can usually tolerate common mode voltages of only about -7V to +12V. If the signal grounds are not connected, a common mode voltage of tens of volts peak to peak (typically induced 50 or 60Hz AC mains hum) can be riding on the differential signal. Sometimes this is enough to cause damage, but more typically it just corrupts the data.

It is common in the surveillance market to see no ground connection between RS485 type equipment. Such systems are basically relying on the equipment safety grounds to keep common mode voltages within the -7V to +12V range. They usually work, but you should not rely on this unless techniques such as optical isolation are used. Some systems are actually galvanically isolated from each other, and it is then possible to make a pseudo ground connection, but such systems are few and far between.
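A quick way to see whether a given ground offset is tolerable is to add it to the driver’s own common mode output and compare the result with the receiver’s -7V to +12V window. This is a minimal sketch under stated assumptions: the driver offset of 2.5V (roughly half a 5V supply) is an illustrative figure, and the ground noise is treated as an AC voltage whose peak can add in either polarity.

```python
# Sketch: worst-case common-mode voltage at an RS422/RS485 receiver.
# Assumptions (illustrative): the driver output sits at a DC common-mode
# offset (2.5V here), and the ground noise between devices (e.g. mains hum)
# is given as a peak value that can swing either way around that offset.

RX_CM_MIN_V = -7.0
RX_CM_MAX_V = 12.0


def common_mode_range(driver_offset_v, ground_noise_peak_v):
    """Return (lowest, highest) common-mode voltage seen by the receiver."""
    swing = abs(ground_noise_peak_v)
    return driver_offset_v - swing, driver_offset_v + swing


def within_receiver_range(driver_offset_v, ground_noise_peak_v):
    low, high = common_mode_range(driver_offset_v, ground_noise_peak_v)
    return RX_CM_MIN_V <= low and high <= RX_CM_MAX_V


if __name__ == "__main__":
    for hum_pp in (10.0, 30.0):          # volts peak to peak between grounds
        low, high = common_mode_range(2.5, hum_pp / 2)
        ok = within_receiver_range(2.5, hum_pp / 2)
        print(f"{hum_pp:4.0f} Vpp: {low:+.1f} V to {high:+.1f} V -> "
              f"{'OK' if ok else 'out of range'}")
```

On these assumptions 10V peak to peak of hum is tolerated, while 30V peak to peak pushes the receiver well outside its window.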

A very major difference between RS422 and RS485 lies in the way the drivers connect to the cable. RS422 drivers are always turned on, i.e. they are either logic High or logic Low, with an output impedance of 10 to 40 ohms in either state, so two such drivers cannot be connected to the same twisted pair: they’d just load each other down too much. For multiple drivers to share one pair, as RS485 allows, only one unit at a time may be activated while the others are held in a high impedance state (“tristated”) so they don’t load down the line. There are a number of ways of controlling this state which will not be covered here, but a key consequence is that during periods of inactivity, when no driver is active, the line is in an undetermined state. So how do the RS485 transceivers in a network know when to receive and when to transmit?
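The one-driver-at-a-time rule can be captured in a small logical model. This is a hypothetical sketch (the `Node` class and `resolve_bus` function are invented for illustration and do not describe any particular transceiver): each driver is either enabled or tristated, an undriven bus has no defined level, and more than one enabled driver is treated as contention.

```python
from dataclasses import dataclass
from typing import List, Optional

# Sketch: logical model of a shared RS485 pair. Each node's driver is either
# enabled (driving 0 or 1) or tristated (high impedance, not loading the line).


@dataclass
class Node:
    name: str
    driver_enabled: bool = False   # False = tristated
    level: int = 1                 # logic level driven when enabled


def resolve_bus(nodes: List[Node]) -> Optional[int]:
    """Return the bus logic level, or None if nobody is driving it."""
    active = [n for n in nodes if n.driver_enabled]
    if len(active) > 1:
        # Only one driver may be active at a time on the shared pair.
        raise RuntimeError("bus contention: " + ", ".join(n.name for n in active))
    if not active:
        return None                # inactive line: state is undetermined
    return active[0].level


if __name__ == "__main__":
    nodes = [Node("master"), Node("slave1"), Node("slave2")]
    print("all tristated:", resolve_bus(nodes))
    nodes[0].driver_enabled, nodes[0].level = True, 0
    print("master sending 0:", resolve_bus(nodes))
    nodes[1].driver_enabled = True
    try:
        resolve_bus(nodes)
    except RuntimeError as err:
        print(err)
```

The `None` result for an idle bus is exactly the undetermined state that the biasing resistors discussed later are meant to remove.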

The most common solution is that all units default to the “Listening” state, in which their drivers are tristated. As soon as activity is detected on the line, the unit receives the signal, and its logic ensures that it cannot transmit while receiving. There is a turnaround time associated with this, which is why many RS485 modems have an adjustable time, typically about 10 times the bit period. For example, if a link is running at 9600 baud, the bit time is about 104µs, so the time would be set to a minimum of about 1ms. These times are usually not critical.
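The arithmetic is easy to tabulate for other rates. A minimal sketch, assuming one bit per baud (as on an ordinary NRZ serial link) and the factor of 10 bit periods given above; the function names are illustrative.

```python
# Sketch: turnaround time as a multiple of the bit period.
# Assumes 1 bit per baud and the ~10 bit-period rule of thumb.


def bit_time_s(baud: float) -> float:
    return 1.0 / baud


def turnaround_s(baud: float, bit_periods: float = 10.0) -> float:
    return bit_periods * bit_time_s(baud)


if __name__ == "__main__":
    for baud in (1200, 9600, 19200, 115200):
        print(f"{baud:>6} baud: bit time {bit_time_s(baud) * 1e6:7.1f} us, "
              f"turnaround ~{turnaround_s(baud) * 1e3:.2f} ms")
```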

Biasing an RS485 Network

Connecting an RS485 network can have its issues. The EIA RS485 specification labels the data wires “A” and “B”, although some manufacturers label them “+” and “-”. This ambiguity is one reason why many systems have trouble transmitting and receiving data. Whatever labelling is used, the polarity must be kept consistent throughout the whole network.

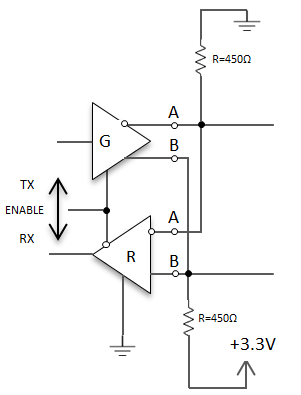

A related issue is that when the bus is inactive, its A and B wires must be in a known state so that the first bit of a transmission is detected. This is why biasing resistors are used to pull the two wires into the inactive state, with wire A pulled towards ground and wire B pulled towards +3.3V. As an example, a 2-wire network of several nodes will often have a 120Ω termination resistor at each end, so to maintain a bias of at least 200mV (B positive with respect to A) at least 3.33mA (200mV ÷ 60Ω) must flow through the 60Ω equivalent load (two 120Ω in parallel). With a 3.3V system, the total resistance of the bias chain can then be no more than about 990Ω (3.3V ÷ 3.33mA); subtracting the 60Ω load leaves roughly 465Ω for each of the two bias resistors, so in practice each is made about 450Ω or slightly less to give some margin. This is shown in Figure 5 below.
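The same calculation as a small helper. This is a minimal sketch that assumes equal pull-up and pull-down resistors and treats the two 120Ω terminators as the only load, ignoring receiver input loading and driver leakage, which in a real network reduce the bias somewhat. The function names are illustrative.

```python
# Sketch: sizing fail-safe bias resistors for an idle 2-wire RS485 bus.
# Assumptions: equal pull-up/pull-down resistors, terminators as the only
# load (receiver inputs and driver leakage ignored), 200mV minimum bias.


def parallel(*resistors: float) -> float:
    return 1.0 / sum(1.0 / r for r in resistors)


def max_bias_resistor(supply_v: float, min_diff_v: float = 0.2,
                      terminators=(120.0, 120.0)) -> float:
    load = parallel(*terminators)        # 60 ohm for two 120 ohm ends
    current = min_diff_v / load          # current needed across the load
    chain = supply_v / current           # total resistance of the bias chain
    return (chain - load) / 2            # shared by pull-up and pull-down


def idle_differential(supply_v: float, r_bias: float,
                      terminators=(120.0, 120.0)) -> float:
    load = parallel(*terminators)
    return supply_v * load / (2 * r_bias + load)   # simple voltage divider


if __name__ == "__main__":
    print(f"max bias resistor at 3.3V: {max_bias_resistor(3.3):.0f} ohm")
    print(f"idle bias with 450 ohm: {idle_differential(3.3, 450.0) * 1e3:.0f} mV")
```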

Figure 5 Use of Bias Resistors in a 2 Wire RS485 Network

In such systems it is essential that the same wires (A or B) are connected throughout the network; otherwise a lock-out condition can occur which kills all transmission. Such a situation is often indicated by the modem indicators at the two ends of the link, with one end showing a permanent transmit state and the other a permanent receive state.
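A small model shows why a swapped pair is so disruptive. This is a hypothetical sketch assuming the EIA/TIA convention that A is negative with respect to B for a logic 1 (mark, also the idle state), a ±200mV receiver threshold and illustrative ±1V wire levels: a node wired the wrong way round decodes every bit inverted, so the biased idle line looks to it like a continuous logic 0 rather than an idle bus.

```python
# Sketch: effect of a swapped A/B pair at one node.
# Assumptions: A below B means logic 1 (mark/idle), +/-200mV receiver
# threshold, +/-1V wire levels. Function names are hypothetical.

THRESHOLD_V = 0.2


def drive(bits, swing_v=2.0):
    """Return (V_A, V_B) for each bit; logic 1 puts A below B."""
    half = swing_v / 2
    return [(-half, half) if b else (half, -half) for b in bits]


def receive(pairs, swapped=False):
    """Decode (V_A, V_B) pairs; swapped=True models A and B wired the wrong way."""
    out = []
    for va, vb in pairs:
        if swapped:
            va, vb = vb, va
        diff = vb - va
        if diff >= THRESHOLD_V:
            out.append(1)
        elif diff <= -THRESHOLD_V:
            out.append(0)
        else:
            out.append(None)   # inside the threshold band: undetermined
    return out


if __name__ == "__main__":
    idle_then_data = [1, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1]   # idle marks, then data
    line = drive(idle_then_data)
    print("correct wiring:", receive(line))
    print("swapped wiring:", receive(line, swapped=True))
```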

We have now very quickly covered the basics of RS422 and RS485. In the next Tech Note we will discuss common real-world problems with these standards.